Volume Demand Forecasting

The workforce planning forecasting process, like any planning process, is one part art and one part science. It is an art because the accuracy of your forecast will sometimes come down to your judgement and experience. It is a science because there are many step-by-step mathematical processes that can be used to turn raw data into predictions of future events.

There are, roughly speaking, four approaches to forecasting:

· Using a tool that implements advanced mathematical forecasting methods to assist with data gathering, data exploration, incorporation of causal factors and automated best-fit modelling, alongside advanced machine learning techniques such as Facebook Prophet, Amazon Forecast’s DeepAR and long short-term memory (LSTM) neural networks.

· Using a simpler but systematic approach that is executed by the forecaster.

· Using a non-systematic approach largely based on human judgement.

· Using a combination of the above three.

I would personally recommend an approach that combines the first three. However, this can take time to build, and generally speaking simple mathematical methods often outperform both more sophisticated methods and human judgement when each is compared individually on a like-for-like basis.

One of the problems with pure human judgement is that it lacks objectivity, which often means we are over-optimistic. It is also often hard to explain fluctuations in contact volume: fluctuations can have multiple causes, each with an unclear impact. This lack of a clear relation between cause and effect makes them difficult for humans to interpret. For these reasons, forecasting purely on human judgement should be reserved for situations where there is no historical data (such as a new product) and otherwise be avoided.

On the other hand, relying completely on a computerised system won’t work either. Every forecasting method has to be able to identify special events, interpret business changes, and so forth, which is near impossible to achieve successfully and consistently without human intervention. It is this human intervention that makes it important for forecasters to understand the consequences of their interaction with the system, and it can disqualify some of the more advanced black-box forecasting methods for practical use.

No matter what tool a forecaster might have at their disposal, it is critical for the forecaster to understand these calculations in order to quality check the inputs and outputs and to have an educated discussion with business leaders. After all, if you are not able to explain how you came up with the forecast in the first place, how do you expect operations to buy in and support your plan?

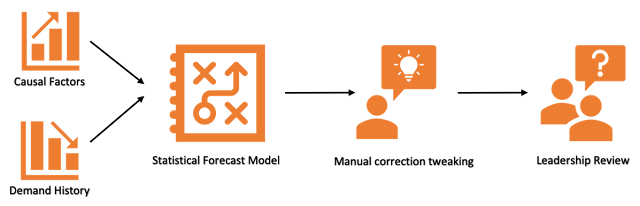

There are four main forecasting components typically used for Customer Operations forecasting:

- Time-Series (demand history) – a method based solely on past history in order to extrapolate forward. If you want to know more about this method, I have written an article on the subject.

- Cause & Effect (Causal) – a method best suited to situations with regular ups and downs driven by causal factors or drivers.

- Guesswork and manual tweaking for special events – yes, sometimes when there is a lack of accurate data, gut feel is all you have. You should also be looking out for future events that might affect the level, trend and seasonality of your forecast versus history.

- Leadership Review – incorporation of the views of various process subject matter experts to align the variables of the forecast to business direction.

Now let’s look at the 6 typical forecasting process steps that usually occur.

1) Define the Characteristics

Before you even gather data to feed a forecasting model, you should sit down and map out the characteristics of the object you are looking to forecast. You do this so that, as you proceed with later steps, you have something to guide you on what data you might need, how accurate a forecast of this object is likely to be, and what forecasting model or method is most appropriate.

There are many characteristics you might consider, but some common ones are:

· Determine the granularity – at what level of detail do you intend this forecast to be provided, e.g. monthly, weekly, daily, or intra-day down to the minute level?

· Determine the time frame – whether the forecast is made for a week, a month, three months, six months, one year or more.

· Growth – is this an established market and product with steady forecast growth, or is it relatively new and thus likely to be volatile and unpredictable?

· Seasonality – are there certain times of the year you are likely to see larger volume than others?

· Customer sensitivity – when forecasting customer contact demand for, say, the national lottery, you are likely to see much larger short-notice surges in demand (for example when there is a prize roll-over) than you would for a banking service.

· Customer Self-Serve System/Process Generated Volatility – how reliable and mature are the systems and processes that support your customers’ self-serve options?

· Upcoming changes – will your business be making adjustments to the product to improve the proposition or align with new regulations? Knowing of these changes in advance allows you to adjust your forecasting process accordingly.
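As an illustration of how a characteristic such as seasonality can be checked before any model is chosen, here is a minimal Python sketch that computes a day-of-week index from daily history. The function name, the sample volumes and the assumption of a seven-day pattern starting on a Monday are all hypothetical, not taken from any particular tool.

```python
from collections import defaultdict
from statistics import mean

def day_of_week_indices(daily_volumes, days_per_week=7):
    """Return one index per weekday position: the average volume for that
    position divided by the overall average (1.0 means a typical day)."""
    by_position = defaultdict(list)
    for i, volume in enumerate(daily_volumes):
        by_position[i % days_per_week].append(volume)
    overall = mean(daily_volumes)
    return [mean(by_position[p]) / overall for p in range(days_per_week)]

# Hypothetical two full weeks of daily contact volumes, Monday first.
history = [120, 100, 95, 90, 110, 40, 25,
           130, 105, 90, 95, 115, 45, 30]

indices = day_of_week_indices(history)
for day, index in zip(["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"], indices):
    print(f"{day}: {index:.2f}")
```

An index well above 1.0 (Monday here) or well below it (the weekend) suggests a pattern strong enough that the chosen forecasting method will need to handle it. The sketch assumes the history covers whole weeks; with partial weeks the indices would be skewed.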

2) Data Gathering & Cleansing

The source and availability of your data of course depend upon how much history exists (if it is a new product then no history may yet exist), your technological set-up, and the type of customer channel you are forecasting, e.g. phone, live chat, email, back office, retail footfall, manufacturing units, etc.

If no data is yet available, the information must come from the judgements made by experts in their field. If the forecast is based solely on judgement and no actual data, we are in the field of qualitative forecasting – a subject for another day.

The data gathering stage involves identifying what data is needed and what data is available. Additionally, different types of patterns can be observed in the available data sets, and it is important to identify these patterns in order to select the correct forecasting model later.

It is also important to think about data quality at this point and spend time cleansing known outliers. An outlier is any data point that falls outside the expected range of the data. Ignore outliers at your peril: they can have a significant adverse impact upon the accuracy of your forecast. As any stockbroker will tell you, history is not necessarily a true reflection of the future. So, make sure you look out for abnormally low or high numbers, missing information, and trends that you know will not repeat.
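One common way to make "outside of the expected range" concrete is the interquartile range (IQR) rule. The sketch below is only one possible approach: the 1.5 multiplier is a convention, the sample volumes are invented, and replacing flagged points with the median of the remaining data is my own assumption. Whether an outlier should be replaced, removed or kept is still a judgement call for the forecaster.

```python
from statistics import median, quantiles

def flag_outliers(values, k=1.5):
    """Flag points more than k * IQR below the first or above the third quartile."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [not (low <= v <= high) for v in values]

def replace_outliers(values, k=1.5):
    """Replace flagged points with the median of the unflagged points
    (an assumed treatment; other treatments are equally valid)."""
    flags = flag_outliers(values, k)
    typical = median(v for v, bad in zip(values, flags) if not bad)
    return [typical if bad else v for v, bad in zip(values, flags)]

# Hypothetical daily volumes with a system-outage spike (350) and a missing day (0).
volumes = [100, 104, 98, 101, 350, 99, 103, 0, 102, 97]

flags = flag_outliers(volumes)
cleaned = replace_outliers(volumes)
print(flags)
print(cleaned)
```

A purely statistical rule like this will also flag genuine events that will repeat, such as a regular seasonal peak, so the flagged points are best treated as a list to review rather than a list to delete.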

3) Select the forecasting model or combination of methods

In this step, the forecaster must decide the method(s) or model(s) of forecasting which will be used. There are many methods of forecasting, of both the qualitative and quantitative types. Quantitative methods typically fall under the categories of Time-Series or causal demand drivers, whilst qualitative methods include techniques such as the Nominal Group and Delphi methods.

4) Build and test the forecasting model

In this step, the forecaster uses one part of the available data to build a forecasting model. A model is a statistical or mathematical formula. The forecaster then uses the other part of the data to test the model, that is, to apply the formula and see whether it gives an accurate answer or not. If not, the forecaster makes the necessary changes to the formula until the results are satisfactory.
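The build-and-test idea can be sketched in a few lines of Python. Here the "model" is a deliberately simple seasonal naive method (repeat the last observed week), tested against a held-back final week and compared with an even simpler flat average. The data, function names and the choice of mean absolute error as the test measure are all assumptions for illustration.

```python
def seasonal_naive(train, horizon, season_length=7):
    """Forecast each future period with the value from one season earlier."""
    last_season = train[-season_length:]
    return [last_season[i % season_length] for i in range(horizon)]

def flat_average(train, horizon):
    """Benchmark: forecast every period with the average of the training data."""
    return [sum(train) / len(train)] * horizon

def mean_absolute_error(actuals, forecasts):
    return sum(abs(a - f) for a, f in zip(actuals, forecasts)) / len(actuals)

# Hypothetical three weeks of daily volumes, Monday first.
history = [120, 100, 95, 90, 110, 40, 25,
           130, 105, 90, 95, 115, 45, 30,
           125, 110, 100, 85, 120, 50, 20]

# Build on the first two weeks, test on the held-back third week.
train, test = history[:-7], history[-7:]

naive_mae = mean_absolute_error(test, seasonal_naive(train, len(test)))
flat_mae = mean_absolute_error(test, flat_average(train, len(test)))
print(f"Seasonal naive MAE: {naive_mae:.1f}")
print(f"Flat average MAE:   {flat_mae:.1f}")
```

On this invented data the seasonal naive method is far closer to the held-back week than the flat average, which is the kind of evidence this step is meant to produce before a model is trusted with a real forecast.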

5) Refine for Special Events

There are an infinite number of potential events that might disrupt a forecast pattern, especially as the forecast interval becomes more granular, for example down to 15-minute intervals. Picking your battles is important; however, allowing for obvious changes in customer demand behaviour is critical. For example, a major event like the Super Bowl or the World Cup final is likely to mean less customer demand during that period, but more subtle special events might include the changing of the clocks or student term-time schedules.
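One simple way to apply such refinements is to keep the baseline forecast untouched and layer event multipliers on top of it. The sketch below assumes a baseline keyed by 15-minute interval and a hypothetical factor of 0.6 for the intervals affected by a major televised event; both the structure and the numbers are invented for illustration.

```python
def apply_event_factors(baseline, event_factors):
    """Multiply each interval's baseline forecast by its event factor.
    Intervals without a factor are left unchanged (factor 1.0)."""
    return {interval: volume * event_factors.get(interval, 1.0)
            for interval, volume in baseline.items()}

# Hypothetical baseline forecast of contacts per 15-minute interval.
baseline = {"19:00": 80, "19:15": 84, "19:30": 90, "19:45": 88}

# Assumed impact: demand drops to 60% of normal once the event starts at 19:30.
event_factors = {"19:30": 0.6, "19:45": 0.6}

adjusted = apply_event_factors(baseline, event_factors)
for interval, volume in adjusted.items():
    print(f"{interval}: {volume:.1f}")
```

Keeping the factors separate from the baseline makes each manual adjustment visible, so it can be explained to operations and reviewed against what actually happened.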

6) Compare events with the forecasts

At times getting to an accurate forecast can be difficult, especially when there are some who believe you possess a crystal ball. So, for those frustrated forecasters out there, the first rule of forecasting is that all forecasts are wrong (it’s impossible to be 100% correct 100% of the time). Forecasting is like trying to drive a car blindfolded while following directions given by a person who is looking out of the back window. However, failing to learn from the times your forecast was wrong, or merely lucky, makes it a lot less likely that forecasting accuracy will improve over time. The first and most beneficial purpose of accuracy analysis is to learn from your mistakes; after all, you can’t manage what you don’t measure.
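To make the comparison measurable, two simple figures are often tracked side by side: the size of the error and its direction. The sketch below uses weighted absolute percentage error (WAPE) for size and a signed bias for direction. The sample numbers are invented, and these two measures are my choice for illustration rather than the only valid ones.

```python
def wape(actuals, forecasts):
    """Weighted absolute percentage error: total absolute error / total actual volume."""
    return sum(abs(a - f) for a, f in zip(actuals, forecasts)) / sum(actuals)

def bias(actuals, forecasts):
    """Signed error as a share of actual volume: positive means over-forecasting."""
    return sum(f - a for a, f in zip(actuals, forecasts)) / sum(actuals)

# Hypothetical weekly actuals and two competing forecasts.
actuals = [100, 120, 80, 100]
forecast_a = [110, 130, 90, 105]   # always too high
forecast_b = [90, 130, 70, 110]    # too low and too high in equal measure

for name, forecast in [("A", forecast_a), ("B", forecast_b)]:
    print(f"Forecast {name}: WAPE {wape(actuals, forecast):.1%}, "
          f"bias {bias(actuals, forecast):+.1%}")
```

Forecast A has the smaller error but is consistently too high, which points to something fixable in the method. Forecast B shows no bias at the total level, yet its errors are larger interval by interval. Looking at only one of the two figures would hide that difference.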
